Stats modeling the world 5th edition – As “Stats Modeling the World, 5th Edition” takes center stage, this opening passage beckons readers into a world crafted with academic rigor and authoritative tone, ensuring a reading experience that is both absorbing and distinctly original.

This comprehensive guide delves into the intricacies of statistical modeling, empowering readers with the knowledge and skills to navigate the complexities of data analysis and interpretation. Prepare to embark on an intellectual journey that will illuminate the power of statistics in shaping our understanding of the world around us.

Data Exploration and Statistical Modeling

Data exploration is a crucial step in statistical modeling as it provides insights into the underlying structure and characteristics of the data. It helps identify patterns, outliers, and potential relationships that can inform the modeling process.

Stats Modeling the World 5th Edition employs various data exploration techniques, including:

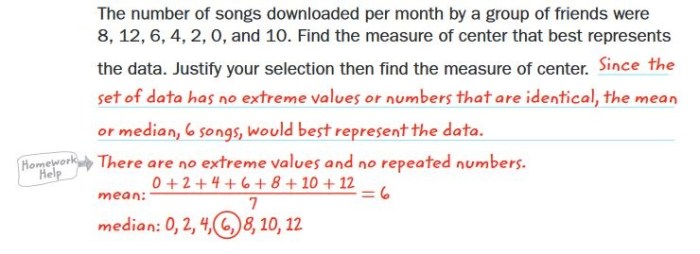

Descriptive Statistics

- Summarizing data using measures like mean, median, standard deviation, and range.

- Identifying patterns and trends through histograms, scatterplots, and box plots.

Data Cleaning and Preparation

Data cleaning and preparation are essential for ensuring data quality and accuracy. This involves:

- Handling missing values through imputation or deletion.

- Dealing with outliers by identifying and addressing extreme values.

li>Transforming data to improve normality or linearity.

Probability and Statistical Inference

Probability and statistical inference are fundamental concepts in statistics that allow us to make predictions and draw conclusions from data. Probability theory provides a mathematical framework for quantifying the likelihood of events, while statistical inference uses probability to make inferences about a population based on a sample.

Fundamental Concepts of Probability Theory

Probability theory is based on the concept of a random variable, which is a variable that can take on different values with known probabilities. The probability of an event is defined as the proportion of times the event occurs in a large number of trials.

The probability of an event can range from 0 (impossible) to 1 (certain).

Types of Probability Distributions

There are many different types of probability distributions, each with its own unique characteristics. Some of the most common distributions include the binomial distribution, the normal distribution, and the Poisson distribution. Each distribution is used to model different types of data, and the choice of distribution depends on the specific problem being investigated.

Principles of Statistical Inference

Statistical inference is the process of using probability to make inferences about a population based on a sample. The two most common methods of statistical inference are hypothesis testing and confidence intervals.

Hypothesis testing is a statistical method used to determine whether there is sufficient evidence to reject a null hypothesis. The null hypothesis is a statement that there is no difference between two groups or that a particular parameter has a certain value.

If the p-value (the probability of obtaining the observed results if the null hypothesis is true) is less than the significance level (usually 0.05), then the null hypothesis is rejected.

Confidence intervals are a statistical method used to estimate the true value of a population parameter. A confidence interval is a range of values that is likely to contain the true value of the parameter. The confidence level is the probability that the true value of the parameter is within the confidence interval.

Regression Analysis

Regression analysis is a statistical method used to model the relationship between a dependent variable and one or more independent variables. It is widely used in various fields to make predictions, identify patterns, and draw inferences from data.

The most common type of regression model is linear regression, which assumes a linear relationship between the dependent and independent variables. Other types of regression models include polynomial regression, logistic regression, and nonlinear regression, which can capture more complex relationships.

Assumptions of Linear Regression

- Linearity:The relationship between the dependent and independent variables is linear.

- Independence:The observations in the data set are independent of each other.

- Normality:The residuals (errors) are normally distributed.

- Homoscedasticity:The variance of the residuals is constant across all values of the independent variables.

Limitations of Linear Regression

- Non-linear relationships:Linear regression cannot capture non-linear relationships between the dependent and independent variables.

- Outliers:Outliers can significantly affect the results of linear regression.

- Multicollinearity:When two or more independent variables are highly correlated, it can lead to unstable regression coefficients.

Model Selection and Evaluation

Model selection and evaluation are important steps in regression analysis. Various techniques can be used to select the best model, including:

- R-squared:Measures the proportion of variance in the dependent variable that is explained by the model.

- Adjusted R-squared:A modified version of R-squared that adjusts for the number of independent variables in the model.

- Cross-validation:A technique used to evaluate the performance of a model on unseen data.

Time Series Analysis

Time series analysis is a statistical technique used to analyze data that is collected over time. Time series data has several unique characteristics that distinguish it from other types of data. First, time series data is often non-stationary, meaning that its mean and variance change over time.

Second, time series data is often autocorrelated, meaning that the value of a data point at time t is correlated with the values of data points at previous times.There are many different types of time series models that can be used to analyze time series data.

The most common type of time series model is the autoregressive integrated moving average (ARIMA) model. ARIMA models are used to forecast future values of a time series by taking into account the past values of the time series and the errors in the past forecasts.Time

series forecasting is the process of using a time series model to predict future values of a time series. Time series forecasting can be used for a variety of purposes, such as planning for future demand, forecasting economic indicators, and predicting weather patterns.

Analysis of Variance (ANOVA)

ANOVA is a statistical method used to compare the means of two or more groups. It is based on the principle that if the means of the groups are equal, then the variances of the groups will also be equal.

However, if the means of the groups are not equal, then the variances of the groups will also be different.ANOVA can be used to test a variety of hypotheses, including:* Whether the means of two or more groups are equal

- Whether the means of two or more groups are different

- Whether the means of two or more groups are equal to a specified value

Types of ANOVA Models

There are two main types of ANOVA models:* One-way ANOVA: This model is used to compare the means of two or more groups that have been randomly assigned to a single independent variable.

Two-way ANOVA

This model is used to compare the means of two or more groups that have been randomly assigned to two independent variables.

Applications of ANOVA in Hypothesis Testing

ANOVA is a powerful tool for hypothesis testing. It can be used to test a variety of hypotheses about the means of groups. ANOVA is often used in research studies to test hypotheses about the effects of different treatments or interventions.

Nonparametric Statistics

Nonparametric statistics, also known as distribution-free statistics, are statistical methods that do not make assumptions about the underlying distribution of the data. They are used when the data does not meet the assumptions of parametric tests, such as normality, homogeneity of variances, or independence of observations.

Nonparametric tests are based on the ranks of the data rather than the actual values. This makes them less sensitive to outliers and extreme values than parametric tests.

Types of Nonparametric Tests

There are many different types of nonparametric tests, each designed to test a different hypothesis. Some of the most common nonparametric tests include:

- The Mann-Whitney U test is used to compare two independent groups.

- The Kruskal-Wallis test is used to compare more than two independent groups.

- The Wilcoxon signed-rank test is used to compare two related groups.

- The Friedman test is used to compare more than two related groups.

Advantages and Disadvantages of Nonparametric Tests, Stats modeling the world 5th edition

Nonparametric tests have several advantages over parametric tests:

- They do not require the data to be normally distributed.

- They are less sensitive to outliers and extreme values.

- They are often easier to compute than parametric tests.

However, nonparametric tests also have some disadvantages:

- They can be less powerful than parametric tests when the data is normally distributed.

- They can be more difficult to interpret than parametric tests.

Case Studies and Applications: Stats Modeling The World 5th Edition

Statistical modeling finds widespread applications across diverse fields, providing valuable insights and aiding decision-making processes.

Real-world examples of statistical modeling applications include:

- Predicting sales trends and forecasting demand in marketing and finance.

- Assessing the effectiveness of medical treatments in clinical research.

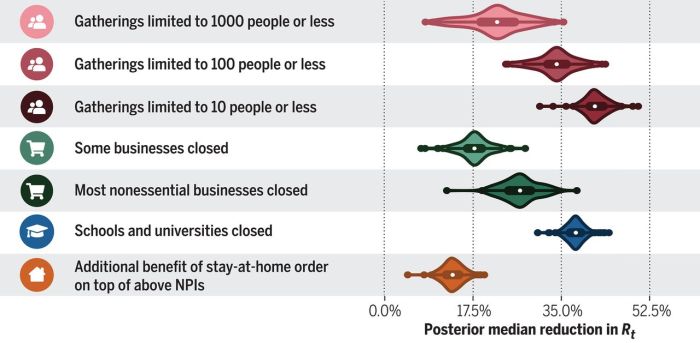

- Modeling the spread of infectious diseases in epidemiology.

- Analyzing customer behavior and preferences in market research.

- Evaluating the impact of environmental factors on crop yields in agriculture.

Challenges and Limitations

Despite its power, statistical modeling faces certain challenges and limitations:

- Data quality and availability: The accuracy and reliability of statistical models depend on the quality of the data used.

- Model selection and validation: Choosing the appropriate statistical model and validating its performance can be complex and time-consuming.

- Assumptions and limitations: Statistical models often rely on certain assumptions, and their validity should be carefully assessed.

- Interpretability and communication: Communicating the results of statistical models to non-statisticians can be challenging.

Solving Real-World Problems

Statistical modeling plays a crucial role in solving real-world problems by providing:

- Data-driven insights: Statistical models extract meaningful patterns and relationships from data, aiding in decision-making.

- Predictions and forecasts: Models can predict future outcomes based on historical data, enabling businesses and organizations to plan effectively.

- Risk assessment and mitigation: Statistical models help assess risks and identify potential threats, allowing for proactive measures.

- Hypothesis testing and evaluation: Models facilitate the testing of hypotheses and the evaluation of interventions, contributing to scientific research and evidence-based decision-making.

Data Visualization

Data visualization is a powerful tool that can help us to understand and communicate data. It allows us to see patterns and trends in data that would be difficult or impossible to detect with the naked eye. Data visualization can also help us to identify outliers and errors in data.

There are many different types of data visualization techniques, each with its own strengths and weaknesses. Some of the most common types of data visualization techniques include:

- Bar charts: Bar charts are used to compare the values of different categories. They are simple to create and easy to understand.

- Line charts: Line charts are used to show how a value changes over time. They can be used to identify trends and patterns in data.

- Scatterplots: Scatterplots are used to show the relationship between two variables. They can be used to identify correlations and outliers in data.

- Histograms: Histograms are used to show the distribution of a variable. They can be used to identify the mean, median, and mode of a variable.

- Box plots: Box plots are used to show the distribution of a variable and identify outliers. They can be used to compare the distributions of different groups of data.

Data visualization can be used for a variety of purposes in statistical modeling. It can be used to:

- Explore data: Data visualization can help us to explore data and identify patterns and trends. This can help us to develop hypotheses and models.

- Interpret models: Data visualization can help us to interpret models and understand how they work. This can help us to make better predictions and decisions.

- Communicate results: Data visualization can help us to communicate the results of our analyses to others. This can help us to persuade others of our findings and get them to take action.

Statistical Computing

Statistical computing plays a pivotal role in statistical modeling by providing a computational environment for data analysis and modeling. It facilitates the execution of complex statistical methods and enables researchers to handle large datasets efficiently.Statistical software packages come in various types, each tailored to specific needs and expertise levels.

Some popular categories include:

-

-*General-purpose statistical packages (e.g., R, SAS, SPSS)

Offer a comprehensive range of statistical functions, including data management, analysis, and visualization.

-*Specialized statistical packages (e.g., Stata, Minitab)

Designed for specific domains such as econometrics, biostatistics, or social sciences, providing tailored tools and functionalities.

-*Open-source statistical packages (e.g., R, Python)

Freely available and customizable, allowing users to create and share custom functions and packages.

The advantages of using statistical software include:

-

-*Efficiency

Automates complex calculations and repetitive tasks, saving time and effort.

-*Accuracy

Reduces the risk of human error and ensures consistent and reliable results.

-*Flexibility

Allows users to explore different statistical methods and customize analyses to suit their specific research needs.

However, it is important to consider the potential disadvantages as well:

-

-*Cost

Commercial statistical software can be expensive, especially for large organizations or institutions.

-*Learning curve

Statistical software requires a certain level of technical proficiency, and mastering its functionalities can take time.

-*Limited flexibility

Some statistical software may not offer the flexibility to handle highly specialized or complex analyses, necessitating the use of specialized software or custom coding.

Ethical Considerations in Statistical Modeling

Statistical modeling involves the use of data and statistical techniques to make predictions and draw conclusions. It plays a crucial role in various fields, including science, medicine, and business. However, it is essential to consider the ethical implications associated with statistical modeling to ensure its responsible and ethical use.

One of the primary ethical considerations is transparency and reproducibility. Researchers must disclose the methods and data used in their statistical models to allow others to replicate and verify the results. This promotes scientific integrity and enables the detection of any potential errors or biases.

Potential Biases and Limitations

Statistical models can be subject to various biases and limitations. For example, sampling bias can occur when the sample used to build the model is not representative of the population of interest. Model bias can arise when the model’s assumptions do not fully capture the underlying data-generating process.

It is crucial to be aware of these potential biases and limitations and take steps to mitigate their impact on the model’s accuracy and reliability.

Another ethical concern is the potential misuse of statistical models. Models can be used to manipulate data or present misleading conclusions, which can have severe consequences. Therefore, it is essential for statisticians and researchers to use statistical models responsibly and avoid engaging in unethical practices.

FAQs

What is the significance of data exploration in statistical modeling?

Data exploration plays a crucial role in statistical modeling as it helps identify patterns, trends, and potential outliers in the data. This process allows researchers to gain insights into the data, refine their modeling assumptions, and make informed decisions about the appropriate statistical methods to use.

What are the different types of probability distributions and their applications?

Probability distributions are mathematical functions that describe the likelihood of different outcomes occurring. Common probability distributions include the normal distribution, binomial distribution, and Poisson distribution. Each distribution has specific characteristics and applications in various fields, such as finance, engineering, and social sciences.

What are the key assumptions of linear regression models?

Linear regression models assume that the relationship between the independent and dependent variables is linear, the residuals are normally distributed, and there is no autocorrelation or heteroscedasticity in the data. These assumptions are crucial for ensuring the validity and accuracy of the model’s predictions.

What are the advantages of using nonparametric tests?

Nonparametric tests are advantageous when the data does not meet the assumptions of parametric tests, such as normality or equal variances. They are also less sensitive to outliers and can be used with smaller sample sizes, making them a valuable tool in certain situations.

What is the role of statistical software in statistical modeling?

Statistical software packages, such as R, SAS, and SPSS, provide powerful tools for data analysis and statistical modeling. They automate complex calculations, enable data visualization, and offer a wide range of statistical methods and functions. Statistical software enhances efficiency, accuracy, and reproducibility in statistical modeling.